TW sent me this piece of web marketing gold…

The ULTIMATE guide to linkbaiting. Building blog content to get traffic from Digg, Reddit, delicious, etc.

A friend in the UK sent me this. The number of searches for ‘facebook’ in the UK has just overtaken the number of searches for ‘myspace’. Search volume has a history of being an excellent predictor, and it’s showing that MySpace is going to get beaten up by Facebook – at least in the UK market.

I found this awesome vid on Guy Kawasaki’s blog. It’s a panel session with Markus Frind, Founder, PlentyofFish.com, and James Hong, Co-Founder, HotorNot.com, and a few others. Markus Frind is my personal hero and much of the reason I have an aversion to VC money.

This is more than an hour long, so when you’re done working tonight at 2am, crack a beer and enjoy this:

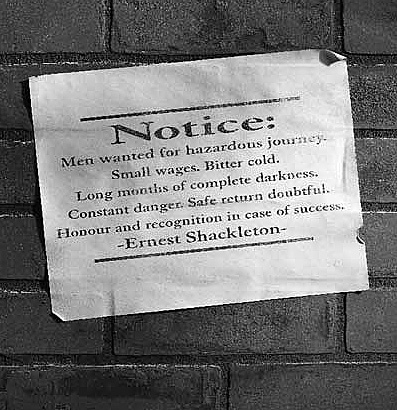

Someone emailed me this morning and in his email sig he has a derivative of what was supposedly an ad by Ernest Shackleton for his 1908 Nimrod Antarctic expedition:

I was intrigued, did a little googling and discovered that the ad seems to be a fake and the first published appearance of the “Men wanted for hazardous journey” ad is in a 1948 book by Julian Watkins “The 100 Greatest Advertisements” – published 40 years after the actual expedition.

Funny how this only surfaced recently and Watkins never caught any flak for it in 1948. I’m curious what other literary murders authors got away with back then, before information was set free.

I’m at open coffee this morning at Lousa’s Coffee shop in Seattle – here early to get some reading in. Come down if you’re free this morning. There’s going to be an awesome group of entrepreneurs and innovators here from 8:30 until everyone leaves (usually after 10:30).

I’ll often find myself chatting about choice of technology with fellow entrepreneurs and invariably it’s assumed the new web app is going to be developed in Rails.

I don’t know enough about Rails to judge its worth. I do know that you can develop applications in Rails very quickly and that it scales complexity better than Perl. Rails may have problems scaling performance. I also know that you can’t hire a Rails developer in Seattle for love or money.

So here are some things to think about when choosing a programming language and platform for your next consumer web business. They are in chronological order – the order you’re going to encounter each issue:

If you answered yes to all 5 of these, then you’ve made the right choice.

I use Perl for my projects, and it does fairly well on most criteria. Its weakest point is scaling to handle complexity. Perl lets you invent your own style of coding, so it can become very hard to read someone else’s code. Usually that’s solved through coding by convention. Damian Conway’s Object Oriented Perl is the bible of Perl convention in case you’re considering going that route.

“You don’t write because you want to say something: you write because you’ve got something to say.”

Next time I’ll have something to say.

I run two consumer web businesses: LineBuzz.com and Geojoey.com. Both have more than 50% of the app implemented in Javascript, which executes in the browser environment.

Something that occurred to me a while ago is that, because most of the execution happens inside the browser and uses our visitors’ CPU and memory, I don’t have to worry about my servers having to provide that CPU and memory.

I found myself moving processing to the client side where possible.

[Don’t worry, we torture our QA guru with a slow machine on purpose so she will catch any browser slowness we cause]

One downside is that everyone can see the Javascript source code – although it’s compressed, which makes it a little harder to reverse engineer. Usually the most CPU intensive code is also the most interesting.

Another disadvantage is that I’m using a bit more bandwidth. But if the app is not shoveling vast amounts of data to do its processing and if I’m OK with exposing parts of my source to competitors, then these issues go away.

Moving execution to the client side opens up some interesting opportunities for distributed processing.

Let’s say you have 1 million page views a day on your home page. That’s 365 million views per year. Let’s say each user spends an average of 1 minute on your page because they’re reading something interesting.

So that’s 365 million minutes of processing time you have available per year.

Converted to years (365 million minutes divided by the 525,600 minutes in a year), that’s about 694 server years. One server working for 694 years or 694 servers working for 1 year.

But let’s halve it because we haven’t taken into account load times or the fact that Javascript is slower than other languages. So we have 347 server years.

Or put another way, it’s like having 347 additional servers per year.

The cheapest server at ServerBeach.com costs $75 per month or $900 per year. [It’s a 1.7Ghz Celeron with 512Megs RAM – we’re working on minimums here!]

So that translates 347 servers per year into $312,300 per year.
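The back-of-envelope arithmetic above can be reproduced in a few lines of Python. This is only a sketch of the estimate as described in this post; the function name and parameter names are my own, and the 50% discount is the same rough guess used above, not a measured figure.

```python
MINUTES_PER_YEAR = 60 * 24 * 365  # 525,600 minutes in a (non-leap) year


def client_side_savings(page_views_per_day, minutes_per_view,
                        discount, server_cost_per_year):
    """Estimate server-years of visitor CPU time and what they'd cost to rent.

    discount is the fraction of that time assumed to be usable
    (0.5 in the post, to allow for load times and slower Javascript).
    """
    visitor_minutes = page_views_per_day * 365 * minutes_per_view
    # Whole server-years, rounded down as in the post.
    server_years = visitor_minutes // MINUTES_PER_YEAR
    usable_server_years = int(server_years * discount)
    dollars_per_year = usable_server_years * server_cost_per_year
    return server_years, usable_server_years, dollars_per_year


server_years, usable, dollars = client_side_savings(
    page_views_per_day=1_000_000,
    minutes_per_view=1,
    discount=0.5,
    server_cost_per_year=900,
)
print(server_years, usable, dollars)  # 694 347 312300
```

Changing `minutes_per_view` or `discount` shows how sensitive the dollar figure is to those two guesses.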

My method isn’t very scientific – and if you go around slowing down people’s machines, you’re not going to have 1 million page views per day for very long. But it gives you a general indication of how much money you can save if you can move parts of a CPU intensive web application to the client side.

So going beyond saving server costs, it’s possible for a high traffic website to do something similar to SETI@home and essentially turn the millions of workstations that spend a few minutes on the site each day into a giant distributed processing Beowulf cluster using little old Javascript.

Since I relaunched my blog on Saturday I’ve had 432 page views. So if I’d been really smart and put AdSense on the blog…

At 0.30 CPC

with a 2% Click thru rate

I’d have earned a grand total of $2.59.

Almost the price of a latte.
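For anyone who wants to plug in their own numbers, the latte math is just page views times click-through rate times cost per click. A minimal sketch, with a function name I made up for illustration:

```python
def adsense_estimate(page_views, click_through_rate, cost_per_click):
    """Rough ad earnings: expected clicks multiplied by revenue per click."""
    expected_clicks = page_views * click_through_rate
    return expected_clicks * cost_per_click


earnings = adsense_estimate(page_views=432, click_through_rate=0.02,
                            cost_per_click=0.30)
print(f"${earnings:.2f}")  # $2.59
```

The 2% click-through rate and $0.30 CPC are the guesses from this post, not real AdSense figures.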

UPDATE: I installed AdSense. My goal: to earn one latte every 2 days, which is about how often I support the local coffee shop.

Google don’t want to do any evil, but they also don’t like free speech that much, so according to the AdSense terms I’m not actually allowed to tell you if I earn enough for a latte. But if you see my posting rate drop then click a few ads, I’ll buy a coffee and output will improve.

In an earlier post I suggested that too much competitive analysis too early might be a bad idea. But it got me thinking about the tools that are available for gathering competitive intelligence about a business and what someone else might be using to gather data about my business.

One of my favorites! Use archive.org to see how your competitor’s website evolved from the early days until now. If they have a robots.txt blocking ia_archiver (archive.org’s web crawler) then you’re not going to see anything, but most websites don’t block the crawler. Here’s Google’s early home page from 1998.
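If you want to check whether a site's robots.txt shuts out the archive crawler before you go looking, Python's standard `urllib.robotparser` can evaluate the rules. This is a sketch that assumes the crawler identifies itself as `ia_archiver`; the function name and the sample robots.txt contents are made up for illustration, and the rules are passed in as text so nothing here touches the network.

```python
from urllib.robotparser import RobotFileParser


def archive_crawler_allowed(robots_txt, url, agent="ia_archiver"):
    """Return True if the given robots.txt text lets `agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


# Hypothetical robots.txt files for illustration.
blocks_archive = "User-agent: ia_archiver\nDisallow: /\n"
blocks_private_only = "User-agent: *\nDisallow: /private/\n"

print(archive_crawler_allowed(blocks_archive, "http://example.com/"))
print(archive_crawler_allowed(blocks_private_only, "http://example.com/"))
```

The first call should report the crawler as blocked and the second as allowed. To test a real site you would download its `/robots.txt` and pass the text in.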

For extra intel, combine Alexa with archive.org to find out when your competitor’s traffic spiked, and then look at their pages during those dates on archive.org to try and figure out what they did right.

Site explorer is useful for seeing who’s linking to your competitor i.e. who you should be getting backlinks from.

Netcraft have a toolbar of their own. Take a look at the site rank to get an indication of traffic. Click the number to see who has roughly the same traffic. The page also shows some useful stuff like which hosting facility your competitor is using.

What interests me more than PageRank is the number of pages of content a website has and which of those are indexed and are ranking well. Search for ‘site:example.com’ on Google to see all pages that Google has indexed for a given website. Smart website owners don’t optimize for individual keywords or phrases, but instead provide a ton of content that Google indexes. They then work on getting a good overall rank for their site and getting search engine traffic for a wide range of keywords. I blogged about this recently on a friend’s blog and it’s called the long tail approach.

If I’m looking at which pages my competitor has indexed, I’m very interested in what specific content they’re providing. So often I’ll skip to result 900 or more and see what the bulk of their content is. You may dig up some interesting info doing this.

Technorati Rank, Links and Authority

If you’re researching a competing blog, use Technorati. Look at the rank, blog reactions (inbound links really) and the Technorati authority. Authority is the number of blogs that have linked to the blog you’re researching in the last 6 months.

Sites like Alexa, Comscore and Compete are incredibly inaccurate and easy to game. Just read this piece by the CEO of Plenty of Fish. Alexa provides an approximation of traffic. It’s also subject to anomalies that throw the stats wildly off. Like the time that Digg.com overtook Slashdot.org in traffic. Someone on Digg posted an article about the win and all the Digg visitors went to Alexa to look at the stats and many installed the toolbar. The result was a big jump in Digg’s traffic according to Alexa when nothing had changed.

PageRank is only updated about once every 2 or more months. New sites could be getting a ton of traffic and have no PageRank, while older sites can have huge PageRank but very little content and only rank well for a few keywords. Install Google Toolbar to see PageRank for any site. You may have to enable it in advanced options.

This may get you blocked by your ISP and may even be illegal, so I’m just mentioning it for informational purposes and because this may be used on you. nmap is a port scanning tool that will tell you what services someone is running on their server, what operating system they’re running, what other machines are on the same subnet and so on. It’s a favorite used by hackers to find potential targets. It also has the potential to slow down or harm a server. It’s also quite easy to detect if someone is running this on your server and find out who they are. So don’t go and load this on your machine and run it.

Compete is basically an Alexa clone. I never use this site because I’ve checked sites that I have real data on and Compete seems way off. They claim to provide demographics too, but if the basics are wrong, how can you trust the demographics?

I use unix command line whois, but you can use whois.net if you’re not a geek. We use a domain proxy service to preserve our privacy, but many people don’t. You’ll often dig up some interesting data in whois, like who the parent company of your competitor is, or who founded the company and is still the owner of the domain name. Try googling any corporation or personal names you find and you might come up with even more data.

HTML source of competitor’s site

Just take a glance at the headers and footers and any comments in the middle of the pages. Sometimes you can tell what server platform they’re running, or sometimes a silly developer has commented out code that’s part of an as-yet-unreleased feature.
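If a page is large, you can pull out just the comments instead of scrolling through the source. Here's a small sketch using Python's standard `html.parser`; the class and function names and the sample HTML are made up for illustration.

```python
from html.parser import HTMLParser


class CommentCollector(HTMLParser):
    """Collects the text of every <!-- ... --> comment in a page."""

    def __init__(self):
        super().__init__()
        self.comments = []

    def handle_comment(self, data):
        self.comments.append(data.strip())


def extract_comments(html):
    collector = CommentCollector()
    collector.feed(html)
    collector.close()
    return collector.comments


# Hypothetical page source for illustration.
sample = (
    "<html><!-- TODO: beta signup --><body><p>Hi</p>"
    '<!-- <a href="/new">New</a> --></body></html>'
)
for comment in extract_comments(sample):
    print(comment)
```

Commented-out markup shows up as plain text in the list, which is exactly the kind of thing that hints at an unreleased feature.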

Personal blogs of competitors and staff

If you’re researching linebuzz.com and you’re my competitor, then it’s a good idea to keep an eye on this blog. I sometimes talk about the technology we use and how we get stuff done. Same applies for your competitors. Google the founders and management team, find their blogs and read them regularly.

dig (not Digg.com)

dig is another unix tool that queries DNS servers. Much of this data is available from netcraft.com mentioned above. But you can use dig to find out who your competitor uses for email with ‘dig mx example.com’ and you can do a reverse lookup on an IP address, which may help you find out who their ISP is (netcraft gives you this).

Another useful thing that dig does is give you an indication of how your competitor is set up for web hosting – if they’re using round-robin DNS or a single IP with a load balancer.
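The same hint can be had from a script: look up the address records for the hostname and count how many distinct IPs come back. This is a rough sketch, not a reliable test; the function names are my own, several addresses can also mean a CDN or geographic DNS, and a single address tells you nothing certain about what sits behind it.

```python
import socket


def describe_hosting(addresses):
    """Guess at the hosting setup from a list of IPv4 addresses."""
    unique = sorted(set(addresses))
    if len(unique) > 1:
        return f"round-robin DNS across {len(unique)} addresses"
    if len(unique) == 1:
        return "single IP (possibly a load balancer in front of several servers)"
    return "no addresses found"


def lookup_addresses(hostname):
    """Live DNS lookup of a hostname's IPv4 addresses (needs network access)."""
    _name, _aliases, addresses = socket.gethostbyname_ex(hostname)
    return addresses


# Documentation-range addresses used purely as sample input.
print(describe_hosting(["192.0.2.10", "192.0.2.11", "192.0.2.10"]))
print(describe_hosting(["192.0.2.10"]))
```

For a real check you would call `describe_hosting(lookup_addresses("www.example.com"))`, ideally a few times, since resolvers may rotate or trim the list.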

traceroute

Another unix tool. Run: ‘/usr/sbin/traceroute www.example.com’ and you’ll get a list of the hops your traffic takes to get to your competitor’s servers. Look at the last few router hostnames before the final destination of the traffic. You may get data on which country and/or city your competitor’s servers are based in and which hosting provider they use. There’s a rather crummy web based traceroute here.
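If you save the traceroute output to a file, picking out those last few router hostnames is easy to script. The sketch below assumes the common `N  hostname (IPv4)  times` line format and that the final hop is the destination itself; the function name and the sample output (including every hostname in it) are invented for illustration.

```python
import re

# Matches lines like: " 3  core1.sea.isp.example (198.51.100.1)  9.8 ms ..."
HOP_LINE = re.compile(r"^\s*\d+\s+(\S+)\s+\((\d{1,3}(?:\.\d{1,3}){3})\)")


def last_hops(traceroute_output, count=3):
    """Return up to `count` router hostnames just before the final destination."""
    hops = []
    for line in traceroute_output.splitlines():
        match = HOP_LINE.match(line)
        if match:  # header lines and "* * *" timeouts don't match and are skipped
            hops.append(match.group(1))
    # Assumes the last matched hop is the destination, so drop it.
    return hops[:-1][-count:]


# Made-up traceroute output for illustration.
sample = """traceroute to www.example.com (192.0.2.80), 30 hops max, 60 byte packets
 1  gateway.home.example (192.168.1.1)  1.2 ms  1.1 ms  1.0 ms
 2  * * *
 3  core1.sea.isp.example (198.51.100.1)  9.8 ms  9.9 ms  10.1 ms
 4  edge2.dal.hosting.example (203.0.113.5)  55.0 ms  54.8 ms  55.3 ms
 5  www.example.com (192.0.2.80)  56.1 ms  56.0 ms  56.2 ms
"""
print(last_hops(sample, count=2))
```

Router names often embed airport or city codes and the provider's domain, which is where the location and hosting hints come from.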

Set up Google news, blog and search alerts for both your competitors’ brands and your own, because your competitors may mention you in a blog comment or somewhere else.

There is a lot more information available via SEC filings, Secretary of State websites and so on – perhaps the subject of a future entry.