There are many caching products, plugins and config suggestions for WordPress.org blogs and sites, but I’m going to take you through the basic WordPress speedup procedure. This will make your site roughly 2.8 times faster (about 180% more hits per second) and give it the ability to handle high numbers of concurrent visitors with little additional software or complexity. I’m also going to throw in a few additional tips on what to look out for down the road, and my opinion on database caching layers. Here goes…

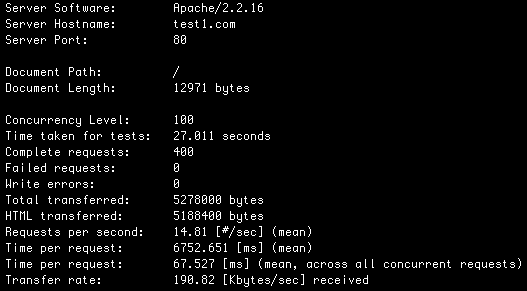

How Fast is WordPress out of the Box?

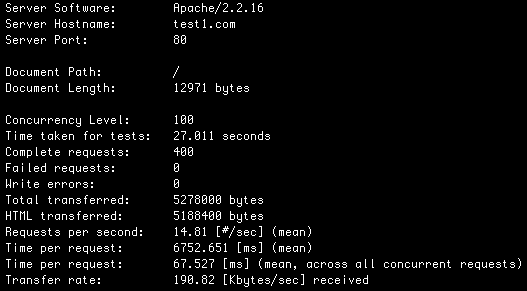

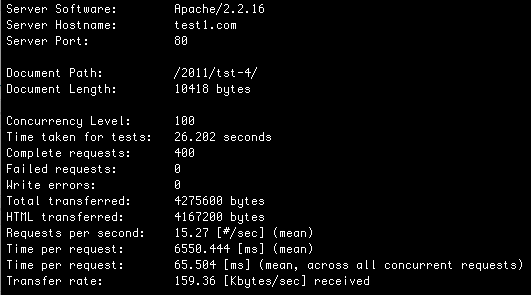

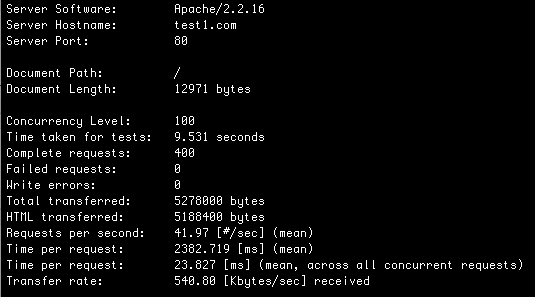

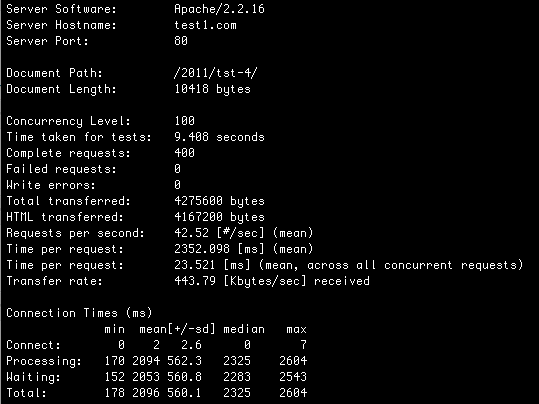

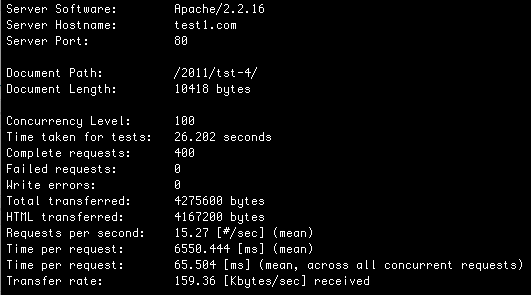

[HP/S = Home Page Hits per second and BE/S = Blog Entry Page Hits per Second]

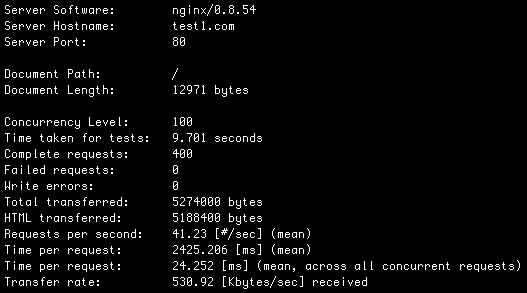

Let’s start with a baseline benchmark. WordPress, out of the box, no plugins, running on a Linode 512 server will give you:

14.81 HP/S and 15.27 BE/S.
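If you want a rough number for your own server, a simple timing loop is enough to get started. The following is a minimal Python sketch of the idea, not the method behind the figures above: it sends requests one at a time, and the function names are placeholders chosen for illustration. A dedicated tool such as `ab` is the better choice for serious measurements.

```python
import time
import urllib.request


def fetch_url(url):
    """Download one page and discard the body."""
    with urllib.request.urlopen(url, timeout=10) as response:
        response.read()


def hits_per_second(fetch, requests=100):
    """Call fetch() `requests` times in a row and return completed calls per second."""
    start = time.perf_counter()
    for _ in range(requests):
        fetch()
    elapsed = time.perf_counter() - start
    return requests / elapsed
```

To measure a home page you would call something like `hits_per_second(lambda: fetch_url("http://test1.com/"))`, replacing test1.com with your own hostname.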

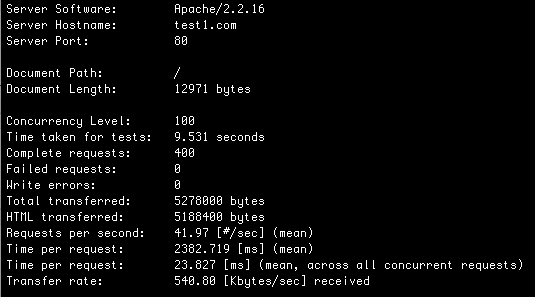

First add an op code cache to speed up PHP execution

That’s not bad. WordPress out of the box with zero tweaking will work great for a site with around 100,000 daily pageviews and a minor traffic spike now and then. But let’s make a ridiculously simple change and add an op code cache to PHP by running the following command in the Linux shell as root on Ubuntu:

apt-get install php-apc

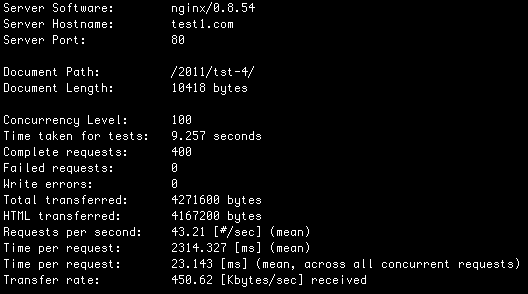

And let’s check our benchmarks again:

41.97 HP/S and 42.52 BE/S
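To put those two sets of numbers side by side, here is a small Python calculation of the speedup. It only uses the benchmark figures quoted above; the `speedup` helper is a name made up for this example.

```python
def speedup(before, after):
    """Return (ratio, percent_increase) for two throughput figures."""
    ratio = after / before
    return ratio, (ratio - 1) * 100


# Baseline versus op code cache, in hits per second.
home_ratio, home_pct = speedup(14.81, 41.97)
entry_ratio, entry_pct = speedup(15.27, 42.52)

print(f"Home page:  {home_ratio:.2f}x ({home_pct:.0f}% more hits per second)")
print(f"Blog entry: {entry_ratio:.2f}x ({entry_pct:.0f}% more hits per second)")
```

Both page types come out at roughly 2.8 times the baseline throughput.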

WOW! That’s a huge improvement. Let’s do more…

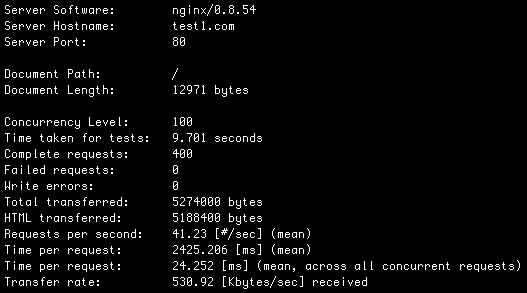

Then install Nginx to handle high numbers of concurrent visitors

Most of your visitors take time to load each page. That means they stay connected to Apache for as much as a few seconds, occupying your Apache children. If you have keep-alive enabled, which is a good thing because it speeds up your page load time, each visitor is going to occupy your Apache processes for a lot longer than just a few seconds. So while we can handle a high number of page views that are served up instantly, we can’t handle lots of visitors wanting to stay connected. So let’s fix that…

Putting a server in front of Apache that can handle a huge number of concurrent connections with very little memory or CPU is the answer. So let’s install Nginx and have it deal with lots of connections hanging around, and have it quickly connect and disconnect from Apache for each request, which frees up your Apache children. That way you can handle hundreds of visitors connected to your site with keep-alive enabled without breaking a sweat.

In your apache2.conf file you’ll need to set up the server to listen on a different port. I modify the following two lines:

NameVirtualHost *:8011

Listen 127.0.0.1:8011

#Then the start of my virtualhost sections also looks like this:

<VirtualHost *:8011>

In your nginx.conf file, the virtual host for my blog looks like this (replace test1.com with your hostname):

#Make sure keepalive is enabled and appears somewhere above your server section. Mine is set to 5 minutes.

keepalive_timeout 300;

server {

listen 80;

server_name .test1.com;

access_log logs/test.access.log main;

location / {

proxy_pass http://127.0.0.1:8011;

proxy_set_header host $http_host;

proxy_set_header X-Forwarded-For $remote_addr;

}

}

And that’s basically it. Other than the above modifications you can use the standard nginx.conf configuration along with your usual apache2.conf configuration. If you’d like to use less memory you can safely reduce the number of Apache children your server uses. My configuration in apache2.conf looks like this:

<IfModule mpm_prefork_module>

StartServers 15

MinSpareServers 15

MaxSpareServers 15

MaxClients 15

MaxRequestsPerChild 1000

</IfModule>

With this configuration the blog you’re busy reading has spiked comfortably to 20 requests per second (courtesy of HackerNews) without breaking a sweat. Remember that Nginx talks to Apache only for the short time it takes to generate each response (milliseconds, rather than the seconds a visitor stays connected), so 15 Apache children can handle a huge number of WordPress hits. The main limitation now is how many requests per second your WordPress installation can execute in terms of PHP code and database queries.

You are now set up to handle 40 hits per second and high concurrency. Relax, life is good!
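Before you relax completely, it is worth confirming that both servers are listening where you expect. Here is a minimal Python sketch that simply tries to open a TCP connection to each port. It assumes the ports used in this article (80 for Nginx, 8011 for Apache), and the function names are made up for illustration. An open port only tells you something is listening, not that it is configured correctly.

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_stack(checks):
    """Map each (label, host, port) triple to a label -> bool result."""
    return {label: port_is_open(host, port) for label, host, port in checks}
```

Run on the server itself, `check_stack([("nginx", "127.0.0.1", 80), ("apache", "127.0.0.1", 8011)])` should report `True` for both entries.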

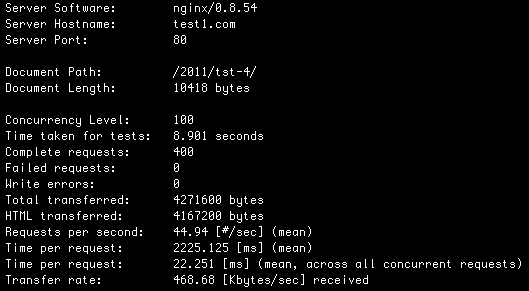

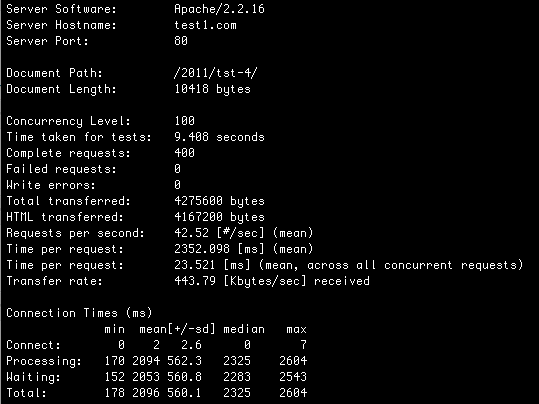

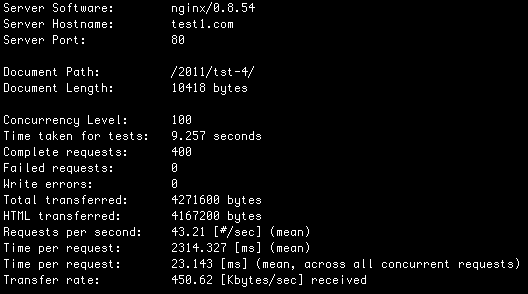

With Nginx on the front end and your op code cache installed, we’re clocking in at:

41.23 HP/S and 43.21 BE/S

We can also handle a high number of concurrent visitors. Nginx will queue requests up if you get a worst case scenario of a sudden spike of 200 people hitting your site. At 41.23 HP/S it’ll take under 5 seconds for all of them to get served. Not too bad for a worst case.
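The arithmetic behind that worst case is simple enough to check. This short Python sketch assumes all 200 visitors arrive at the same instant and are served at the steady benchmark rate. Real traffic is messier, so treat it as a back-of-the-envelope estimate.

```python
def seconds_to_drain(queued_requests, hits_per_second):
    """Time to serve a burst of queued requests at a steady throughput."""
    return queued_requests / hits_per_second


total = seconds_to_drain(200, 41.23)
# The last visitor waits the full time; on average a visitor waits about half of it.
print(f"Last visitor served after {total:.2f} seconds")
print(f"Average wait about {total / 2:.2f} seconds")
```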

Compression for the dialup visitors

Latency, or the round trip time for packets on the Internet, is the biggest slow down for websites (and just about everything else that doesn’t stream). That’s why techniques like keep-alive really speed things up: they avoid a new three-way handshake for every request a visitor makes. Reducing the amount of data transferred by using compression doesn’t give a huge speedup for broadband visitors, but it will speed things up for visitors on slower connections. To add Gzip to your Nginx configuration, add the following inside the http section of your nginx.conf file:

gzip on;

gzip_min_length 1100;

gzip_buffers 4 8k;

gzip_types text/plain text/css application/x-javascript application/javascript text/xml application/xml application/xml+rss text/javascript;
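To get a feel for what compression saves, you can gzip a page yourself. The sketch below uses Python’s built-in gzip module on a made-up, very repetitive HTML snippet, so the saving is better than you would see on a real page. Python’s default compression level is also not necessarily the one Nginx uses. The 1100 byte threshold mirrors the `gzip_min_length` setting above.

```python
import gzip

GZIP_MIN_LENGTH = 1100  # same threshold as gzip_min_length above


def gzip_savings(body: bytes):
    """Return (original_size, sent_size) in bytes; bodies under the threshold are sent as-is."""
    if len(body) < GZIP_MIN_LENGTH:
        return len(body), len(body)
    return len(body), len(gzip.compress(body))


# Made-up, repetitive HTML standing in for a blog page.
page = ("<div class='post'><h2>Title</h2><p>Some blog text.</p></div>\n" * 200).encode()
original, sent = gzip_savings(page)
print(f"{original} bytes before, {sent} bytes after gzip")
```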

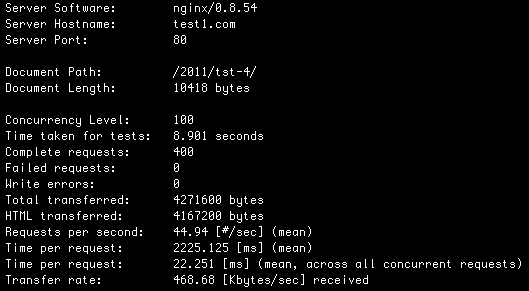

We’re still benchmarking at:

40.26 HP/S and 44.94 BE/S

What about a database caching layer?

The short answer is: Don’t bother, but make darn sure you have query caching enabled in MySQL.

Here’s the long answer:

I run WordPress on a 512 Linode VPS which is a small but popular configuration. Linode’s default MySQL configuration has 16M key buffer for MyISAM key caching and it has the query cache enabled with 16M available.

First I created a test Linode VPS to do some benchmarking. I started with a fresh WordPress 3.1.3 installation with no plugins enabled. I created a handful of blog entries.

Then I enabled query logging on the mysql server and hit the home page with a fresh browser with an empty cache. I logged all queries that WordPress used to generate the home page. I also hit refresh a few times to make sure there were no extra queries showing up.

I took the queries I saw and put them in a benchmarking loop.

I then did the same with a blog entry page – also putting those queries in a benchmark script.

Here’s the resulting script.

Benchmarking this on a default Linode 512 server I get:

447.9 home page views per second (in purely database queries).

374.14 blog entry page views per second (in purely database queries).

What this means is that the “problem” you are solving when adding a database caching layer to WordPress is the database’s inability to handle more than 447 home page views per second or 374 blog entry page views per second (on a Linode 512 VPS).
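If you want to try the same kind of measurement yourself, the loop looks roughly like this. This is a minimal sketch and not the script linked above: it uses Python’s built-in sqlite3 as a stand-in for MySQL so that it runs anywhere, and the table and queries are made up for illustration instead of being taken from the WordPress query log.

```python
import sqlite3
import time

# Stand-in data: a tiny made-up posts table, not the real WordPress schema.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT, status TEXT)")
db.executemany(
    "INSERT INTO posts (title, status) VALUES (?, ?)",
    [(f"Post {i}", "publish") for i in range(10)],
)

# Hypothetical queries standing in for the ones captured from the query log.
HOME_PAGE_QUERIES = [
    "SELECT id, title FROM posts WHERE status = 'publish' ORDER BY id DESC LIMIT 10",
    "SELECT COUNT(*) FROM posts WHERE status = 'publish'",
]


def page_views_per_second(connection, queries, iterations=1000):
    """Run every query for one page `iterations` times; return page views per second."""
    start = time.perf_counter()
    for _ in range(iterations):
        for query in queries:
            connection.execute(query).fetchall()
    return iterations / (time.perf_counter() - start)


print(f"{page_views_per_second(db, HOME_PAGE_QUERIES):.1f} home page views per second")
```

The number this prints says nothing about MySQL. The point is the shape of the test: one pass through the query list counts as one page view.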

So my suggestion to WordPress.org bloggers is to forgo adding the complexity of a database caching layer and focus instead on other areas where real performance issues exist (like providing a web server that supports keep-alive and can also handle a high number of concurrent visitors – as discussed above).

Make sure there are two lines in your my.cnf MySQL configuration file that read something like:

query_cache_limit = 1M

query_cache_size = 16M

If they’re missing, your query cache is probably disabled. You can find your MySQL config file at /etc/mysql/my.cnf on Ubuntu.
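If you manage more than one server, a small script can do this check for you. The following Python sketch scans the text of a my.cnf file for the `query_cache_*` settings shown above. It is a simplification: it reads a single file, does not follow include directives, and only recognises the underscore spelling of the option names. The function names are invented for this example. Running `SHOW VARIABLES LIKE 'query_cache%';` in the mysql client remains the definitive check.

```python
import re

SIZE_UNITS = {"": 1, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def parse_size(value):
    """Convert a my.cnf size such as '16M' to bytes."""
    match = re.fullmatch(r"(\d+)([KMG]?)", value.strip().upper())
    if not match:
        raise ValueError(f"unrecognised size: {value!r}")
    return int(match.group(1)) * SIZE_UNITS[match.group(2)]


def query_cache_settings(config_text):
    """Collect query_cache_* settings from my.cnf text, ignoring comments."""
    settings = {}
    for line in config_text.splitlines():
        line = line.split("#", 1)[0].strip()
        if line.startswith("query_cache") and "=" in line:
            key, value = line.split("=", 1)
            settings[key.strip()] = value.strip()
    return settings


def query_cache_configured(config_text):
    """True if query_cache_size is present and larger than zero."""
    size = query_cache_settings(config_text).get("query_cache_size")
    return size is not None and parse_size(size) > 0
```

On Ubuntu you would call it as `query_cache_configured(open("/etc/mysql/my.cnf").read())`.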

Footnotes:

Just for fun, I disabled MySQL’s query cache to see how the benchmarking script performed:

132.1 home page views per second (in DB queries)

99.6 blog entry page views per second (in DB queries)

Not too bad considering I’m forcing the db to look up the data for every query. Remember, I’m doing this on one of the lowest end servers money can buy. So how does this perform on a dedicated server with Intel Xeon E5410 processor, 4 gigs of memory and 15,000 rpm mirrored SAS drives? [My dev box for Feedjit 🙂 ]

1454.6 home page views per second

1157.1 blog entry page views per second

Should you use browser and/or server page caching?

Short answer: Don’t do it.

You could force browsers to cache each page for a few minutes or a few hours. You could also generate all your WordPress pages into static content every few seconds or minutes. Both would give you a significant performance boost, but will hurt the usability of your site.

Visitors will hit a blog entry page, post a comment, hit your home page and return to the blog entry page to check for replies. There may be replies, but they won’t see them because you’ve served them a cached page. They may or may not return. You make your visitor unhappy and lose the SEO value of the comment reply they could have posted.

Heading into the wild blue yonder, what to watch out for…

The good news is that you’re now set up to handle big traffic spikes on a relatively small server. Here are a few things to watch out for:

Watch out for slow plugins, templates or widgets

WordPress’s stock installation is pretty darn fast. Remember that each plugin, template and widget you install executes its own PHP code. Now that your server is configured correctly, your two biggest bottlenecks that affect how much traffic you can handle are:

- Time spent executing PHP code

- Time spent waiting for the database to execute a query

Whenever you install a new plugin, template or widget, it introduces new PHP code and may introduce new database queries. Do one or all of the following:

- Google around to see if the plugin/widget/template has any performance issues

- Check the load graphs on your server for the week after you install to see if there’s a significant increase in CPU or memory usage or disk IO activity

- If you can, use ‘ab’ to benchmark your server and make sure it matches any baseline you’ve established

- Use Firebug, YSlow or the developer tools in Chrome or Safari (go to the Network panel) and check if any page component is taking too long to load. Also notice the size of each component and total page size.

Keep your images and other page components small(ish)

Sometimes you just HAVE to add that hi-res photo. As I mentioned earlier, latency is the real killer, so don’t be too paranoid about adding a few KB here and there for usability and aesthetics. But mind you don’t accidentally upload an uncompressed 5MB image or other large page component, unless that was your intention.
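One way to catch an accidental giant upload is to scan your uploads folder now and then. Here is a minimal Python sketch that lists files above a size limit. The 1 MB default is an arbitrary threshold picked for illustration, and the function name is made up.

```python
import os


def find_large_files(root, limit_bytes=1_000_000):
    """Return (path, size) pairs under root larger than limit_bytes, largest first."""
    large = []
    for directory, _subdirs, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(directory, name)
            try:
                size = os.path.getsize(path)
            except OSError:
                continue  # skip files that vanish or cannot be read
            if size > limit_bytes:
                large.append((path, size))
    return sorted(large, key=lambda item: item[1], reverse=True)
```

Pointing it at your WordPress `wp-content/uploads` directory gives you a quick list of candidates to compress or resize.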

Make sure any JavaScript is added to the bottom of your page or is loaded asynchronously

JavaScript execution can slow down your page load time if it executes as the page loads. Unless a vendor tells you that their JavaScript executes asynchronously (without causing the page to wait), put their code at the bottom of the page or you’ll risk every visitor having to wait for that JavaScript to see the rest of your page.

Don’t get obsessive, it’s not healthy!

It’s easy to get obsessed with eking out every last millisecond in site performance. Trust me, I’ve been there and I’m still in recovery. You can crunch your HTML, use CSS sprites, combine all scripts into a single script, block scrapers and Yahoo (hehe), get rid of all external scripts, images and Flash, wear a woolen robe, shave your head and only eat oatmeal. But you’ll find you hit a point of diminishing returns and the time you’re spending preparing for those traffic spikes could be better spent on getting the traffic in the first place. Get the basics right and then deal with specific problems as they arise.

“We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil” ~Donald Knuth

Conclusion

The two most effective things you can do to a new WordPress blog to speed it up are to add an op code cache like APC, and to configure it to handle a high number of concurrent visitors using Nginx. Nothing else in my experience will give you a larger speed and concurrency improvement. Please let me know in the comments if you’ve found another magic bullet or about any errors or omissions. Thanks.