In an earlier post I suggested that too much competitive analysis too early might be a bad idea. But it got me thinking about the tools that are available for gathering competitive intelligence about a business and what someone else might be using to gather data about my business.

Archive.org

One of my favorites! Use archive.org to see how your competitor’s website evolved from the early days until now. If they have a robots.txt blocking ia_archiver (the user agent of archive.org’s web crawler), then you’re not going to see anything, but most websites don’t block the crawler. Here’s Google’s early home page from 1998.

For extra intel, combine Alexa with archive.org: find out when your competitor’s traffic spiked, then look at their pages from those dates on archive.org to try to figure out what they did right.
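To check up front whether a site blocks the archive.org crawler, you can run its robots.txt through Python’s standard robots parser. This is only a rough sketch: the function name and the sample robots.txt are made up for illustration, and it assumes the crawler identifies itself as ia_archiver.

```python
from urllib.robotparser import RobotFileParser


def blocks_archive_crawler(robots_txt: str, path: str = "/") -> bool:
    """Return True if this robots.txt text disallows ia_archiver for `path`."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch("ia_archiver", path)


# Made-up robots.txt that shuts out the archive crawler entirely.
sample = """\
User-agent: ia_archiver
Disallow: /
"""

print(blocks_archive_crawler(sample))
```

Paste in the contents of your competitor’s (or your own) /robots.txt in place of the sample.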

Yahoo Site Explorer

Site Explorer is useful for seeing who’s linking to your competitor, i.e. who you should be getting backlinks from.

Netcraft Site Report

Netcraft have a toolbar of their own. Take a look at the site rank to get an indication of traffic. Click the number to see who has roughly the same traffic. The page also shows some useful stuff like which hosting facility your competitor is using.

Google pages indexed

What interests me more than PageRank is the number of pages of content a website has, and which of those are indexed and ranking well. Search for ‘site:example.com’ on Google to see all the pages that Google has indexed for a given website. Smart website owners don’t optimize for individual keywords or phrases, but instead provide a ton of content for Google to index. They then work on getting a good overall rank for their site and getting search engine traffic for a wide range of keywords. This is called the long tail approach, and I blogged about it recently on a friend’s blog.

If I’m looking at which pages my competitor has indexed, I’m very interested in what specific content they’re providing. So often I’ll skip to result 900 or more and see what the bulk of their content is. You may dig up some interesting info doing this.
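If you do this a lot, a few lines of Python can build the search URL for you. This is a sketch only: site_query_url is a name I made up, and it assumes Google’s ‘start’ URL parameter still controls the result offset. Google may also refuse to page as deep as you ask.

```python
from urllib.parse import urlencode


def site_query_url(domain: str, start: int = 0) -> str:
    """Build a Google search URL for 'site:<domain>', beginning at result `start`."""
    params = {"q": f"site:{domain}", "start": start}
    return "https://www.google.com/search?" + urlencode(params)


# Jump straight to the deep end of the index.
print(site_query_url("example.com", start=900))
```

Open the printed URL in your browser rather than fetching it from a script.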

Technorati Rank, Links and Authority

If you’re researching a competing blog, use Technorati. Look at the rank, the blog reactions (inbound links, really) and the Technorati authority. Authority is the number of blogs that have linked to the blog you’re researching in the last 6 months.

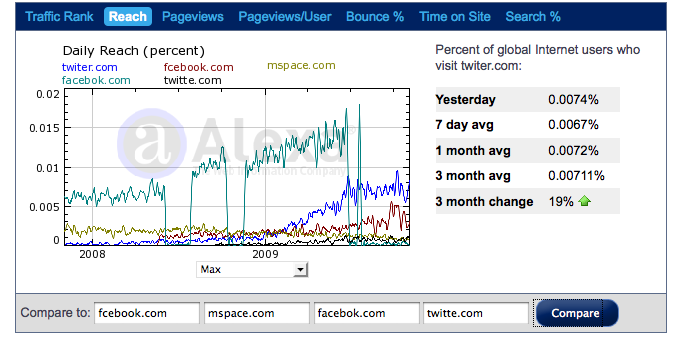

Alexa

Sites like Alexa, Comscore and Compete are incredibly inaccurate and easy to game. Just read this piece by the CEO of Plenty of Fish. Alexa only provides an approximation of traffic, and it’s subject to anomalies that throw the stats wildly off, like the time that Digg.com overtook Slashdot.org in traffic. Someone on Digg posted an article about the win, all the Digg visitors went to Alexa to look at the stats, and many of them installed the toolbar. The result was a big jump in Digg’s traffic according to Alexa when nothing had actually changed.

Google PageRank

PageRank is only updated every two months or so. New sites could be getting a ton of traffic and have no PageRank, while older sites can have a huge PageRank but very little content and only rank well for a few keywords. Install Google Toolbar to see the PageRank for any site. You may have to enable it in advanced options.

nmap

This may get you blocked by your ISP and may even be illegal, so I’m just mentioning it for informational purposes and because this may be used on you. nmap is a port scanning tool that will tell you what services someone is running on their server, what operating system they’re running, what other machines are on the same subnet and so on. It’s a favorite used by hackers to find potential targets. It also has the potential to slow down or harm a server. It’s also quite easy to detect if someone is running this on your server and find out who they are. So don’t go and load this on your machine and run it.

Compete

Compete is basically an Alexa clone. I never use this site because I’ve checked sites that I have real data on and Compete seems way off. They claim to provide demographics too, but if the basics are wrong, how can you trust the demographics?

whois

I use unix command line whois, but you can use whois.net if you’re not a geek. We use a domain proxy service to preserve our privacy, but many people don’t. You’ll often dig up some interesting data in whois, like who the parent company of your competitor is, or who founded the company and is still the owner of the domain name. Try googling any corporation or personal names you find and you might come up with even more data.
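If you save the whois output to a file, a small parser makes it easier to pick out the names worth googling. This is a rough sketch, assuming the common ‘Key: Value’ layout. Whois formats vary a lot between registries, and parse_whois and the sample record below are made up for illustration.

```python
def parse_whois(text: str) -> dict[str, list[str]]:
    """Collect 'Key: Value' lines from whois output into a dict of lists."""
    fields: dict[str, list[str]] = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blanks and the comment/notice lines many registries add.
        if not line or line.startswith(("%", "#", ">>>")):
            continue
        key, sep, value = line.partition(":")
        value = value.strip()
        if sep and value:
            fields.setdefault(key.strip().lower(), []).append(value)
    return fields


# Made-up record, not real whois data.
sample = """\
Domain Name: EXAMPLE.COM
Registrant Organization: Example Holdings LLC
Name Server: NS1.EXAMPLE.NET
Name Server: NS2.EXAMPLE.NET
"""

record = parse_whois(sample)
print(record.get("registrant organization"))
```

Keys are lowercased and repeated keys (like name servers) are kept as a list, so nothing gets silently overwritten.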

HTML source of competitor’s site

Just take a glance at the headers and footers and any comments in the middle of the pages. Sometimes you can tell what server platform they’re running, and sometimes a silly developer has commented out code that’s part of an as-yet-unreleased feature.
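If you’d rather not eyeball the source, Python’s built-in HTML parser can pull out the comments for you. This is a minimal sketch: the SourceClues class and the sample page are made up, and looking at the ‘generator’ meta tag is my own assumption about where a platform hint might show up.

```python
from html.parser import HTMLParser


class SourceClues(HTMLParser):
    """Collect HTML comments and the <meta name="generator"> value, if any."""

    def __init__(self):
        super().__init__()
        self.comments = []
        self.generator = None

    def handle_comment(self, data):
        text = data.strip()
        if text:
            self.comments.append(text)

    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            attr = dict(attrs)
            if (attr.get("name") or "").lower() == "generator":
                self.generator = attr.get("content")


def scan(html: str) -> SourceClues:
    parser = SourceClues()
    parser.feed(html)
    parser.close()
    return parser


# Made-up page source for illustration.
sample = """<html><head><meta name="generator" content="WordPress 2.3"></head>
<body><!-- TODO: enable new pricing page --><p>Hi</p></body></html>"""

clues = scan(sample)
print(clues.generator, clues.comments)
```

Save a competitor’s page with your browser’s ‘view source’ and pass the text to scan().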

Personal blogs of competitors and staff

If you’re researching linebuzz.com and you’re my competitor, then it’s a good idea to keep an eye on this blog. I sometimes talk about the technology we use and how we get stuff done. Same applies for your competitors. Google the founders and management team, find their blogs and read them regularly.

dig (not Digg.com)

dig is another unix tool, one that queries DNS servers. Much of this data is available from netcraft.com, mentioned above. But you can use dig to find out who your competitor uses for email with ‘dig mx example.com’, and you can do a reverse lookup on an IP address, which may help you find out who their ISP is (Netcraft gives you this too).

Another useful thing that dig does is give you an indication of how your competitor is set up for web hosting: whether they’re using round-robin DNS or a single IP with a load balancer.
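You can get a similar hint without dig using Python’s socket module. This is a sketch under assumptions: it goes through your system resolver rather than querying DNS servers directly the way dig does, the function names are made up, and the ‘hint’ is a guess, not proof of how anyone’s hosting is built.

```python
import socket


def a_records(hostname: str) -> list[str]:
    """Return the IPv4 addresses the system resolver reports for hostname."""
    _name, _aliases, addresses = socket.gethostbyname_ex(hostname)
    return sorted(set(addresses))


def hosting_hint(addresses: list[str]) -> str:
    """Turn a list of A records into a rough guess about the setup."""
    if len(addresses) > 1:
        return "multiple A records: possibly round-robin DNS"
    if len(addresses) == 1:
        return "single A record: one server, or a load balancer in front"
    return "no A records found"


def reverse_lookup(ip: str):
    """Return the hostname for an IP address, or None if there is no PTR record."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except (socket.herror, socket.gaierror):
        return None
```

For example, hosting_hint(a_records("www.example.com")) does the lookup and the guess in one go, and reverse_lookup() on one of the returned addresses may reveal the ISP or hosting provider’s naming.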

traceroute

Another unix tool. Run ‘/usr/sbin/traceroute www.example.com’ and you’ll get a list of the routers your traffic passes through on the way to your competitor’s servers. Look at the last few router hostnames before the final destination of the traffic. You may get data on which country and/or city your competitor’s servers are based in and which hosting provider they use. There’s a rather crummy web-based traceroute here.
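If you save the traceroute output, this little script picks out the last few hops for you. It’s a sketch that assumes the usual ‘hop hostname (ip) timings’ line layout, which differs between traceroute versions. The last_hops name and the sample output are made up, using reserved documentation addresses.

```python
import re

# Matches lines like: " 4  edge2.dallas.hosting.example (198.51.100.99)  41.0 ms ..."
HOP_RE = re.compile(r"^\s*(\d+)\s+(\S+)\s+\((\d{1,3}(?:\.\d{1,3}){3})\)")


def last_hops(traceroute_output: str, count: int = 3):
    """Return (hop number, hostname, ip) for the last `count` hops that answered."""
    hops = []
    for line in traceroute_output.splitlines():
        match = HOP_RE.match(line)
        if match:  # the header line and '* * *' timeouts don't match
            hop, host, ip = match.groups()
            hops.append((int(hop), host, ip))
    return hops[-count:]


# Made-up traceroute output for illustration.
sample = """\
traceroute to www.example.com (203.0.113.10), 30 hops max, 60 byte packets
 1  gateway.home.example (192.0.2.1)  1.2 ms  1.1 ms  1.0 ms
 2  * * *
 3  core1.nyc.isp.example (198.51.100.7)  9.8 ms  9.9 ms  10.1 ms
 4  edge2.dallas.hosting.example (198.51.100.99)  41.0 ms  40.7 ms  40.9 ms
 5  www.example.com (203.0.113.10)  41.5 ms  41.2 ms  41.4 ms
"""

for hop in last_hops(sample):
    print(hop)
```

City names or airport codes in the router hostnames are the bits to look for.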

Google alerts

Set up Google news, blog and search alerts for both your competitors’ brands and your own, because your competitors may mention you in a blog comment or somewhere else.

There is a lot more information available via SEC filings, Secretary of State websites and so on – perhaps the subject of a future entry.